Product Tank Leeds — November 2025 notes

Notes taken from Product Tank Leeds, Wednesday 19 November 2025.

Contents

- Talk 1 notes: Mark Palfreeman — Rejecting a culture of experimentation

- Talk 2 notes: Jennifer Riordan — Building your backlog: leveraging user research

- Talk 3 notes: John Steward — Sustainable AI for the design and delivery of greener products

- Patterns and themes across the talks

- Takeaways

- (My quick ideas on) What here might help in public healthcare?

Talk 1 notes: Mark Palfreeman — Rejecting a culture of experimentation

Background

- Senior business intelligence (BI) manager at Flutter (present-day owner of Paddy Power, Betfair and Sky Bet)

- Gambling industry grew 991% in 15 years; Sky Bet grew 35% year-on-year 2012-2018

- Industry focused on rapid feature development and welcome offers to grab market share

Problems

- Only about 1 in 10 experiments succeed (12% win rate, according to Optimizely)

- Creates tension between high growth expectations and low success rates

- Leadership reluctant to delay features for testing with uncertain outcomes

- Fear of failure common across teams - nobody wants to invest time in something that might fail

- Experimentation culture gets rejected in favour of the “HIPPO” (highest paid person's opinion)
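
A rough sketch of why that tension bites, using the 12% win rate quoted above. It assumes every experiment is independent and wins at the same rate, which is a simplification and not something claimed in the talk; the function names are illustrative only.

```python
# Illustrative only: assumes each experiment is independent with the same win rate.
WIN_RATE = 0.12  # Optimizely figure quoted in the talk


def expected_wins(n_experiments: int, win_rate: float = WIN_RATE) -> float:
    """Average number of winning experiments out of n."""
    return n_experiments * win_rate


def chance_of_no_wins(n_experiments: int, win_rate: float = WIN_RATE) -> float:
    """Probability that every one of n independent experiments loses."""
    return (1 - win_rate) ** n_experiments


if __name__ == "__main__":
    for n in (1, 5, 10, 20):
        print(
            f"{n:>2} experiments: expect {expected_wins(n):.1f} wins, "
            f"{chance_of_no_wins(n):.0%} chance of no wins at all"
        )
```

Under these assumptions, a team running ten experiments still has better than a one-in-four chance of seeing no wins at all, which is the situation where "did we learn?" is a more useful measure than "did we win?".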

A solution: shift to a culture of learning

- Reframe success metric from "did we win?" to "did we learn?"

- This makes every experiment successful whether it wins or loses

- Changes language from delivering features to delivering learnings

- Example: instead of "2.3% conversion increase", communicate "football customers less bothered by timely content than horse racing customers"

- Uses "hierarchy of evidence" to show experimentation trumps expert opinion

Outcomes

- Removes pressure from experiment owners

- Makes teams less risk-averse

- Easier to communicate to leadership and stakeholders

- Time spent learning about customers is never wasted

- People need to believe in the approach for it to work (like the "Elf" Christmas spirit analogy)

Talk 2 notes: Jennifer Riordan — Building your backlog: leveraging user research

Background

- Product consultant at CGI with 10 years' experience across multiple sectors

- Passionate about doing what's best for people, not just following frameworks

Problems

- Teams use research to validate ideas rather than gain genuine insights

- Distinction between idea (thought/suggestion) and hypothesis (evidence-based starting point)

- Stakeholders often bring "opinionated branded ideas" that teams feel pressured to validate

The situation

- Stakeholder request: change filters on product list page

- Recruited 10 participants (mix of regular and new visitors, desktop and mobile)

- Research questions focused on: why people visit, how they find products, frustrations, what they'd do if stuck

Findings

- Nobody used the filters at all

- Instead, discovered valuable insights about: search returning no results, need for product categorisation, desire for product labels, more relevant images, "show personalised only" toggle

- Team nervous about contradicting stakeholder expectations

Action to pivot

- Took these learnings into backlog instead of original filter request

- Focused on delivering real value to users

Key principles

- There's no "wrong answer" in research - it's about finding truth

- Gather insights from multiple data sources (heat mapping, analytics, conversion data)

- Don't be afraid to adapt if users look in a different place

- Practice exploration rather than just validating delivery

- Listen to what users are telling you

Talk 3 notes: John Steward — Sustainable AI for the design and delivery of greener products

Background

- Head of Product at DEFRA (40 product managers, 165 digital products)

- Focus on sustainability as core departmental value

Context

- Digital sector would be 5th highest emitting country if it were a nation

- Emissions were equivalent to global air travel, and have now surpassed it

- AI brings efficiency opportunities but has huge environmental footprint

- Water usage for cooling data centres and power consumption from non-renewable sources

- International Energy Agency predicts 10x increase in AI power consumption by 2026

The challenge

- How to leverage AI while ensuring net positive result - "juice worth the squeeze"

- Started with low baseline: most PMs "very unconfident" about AI

Approach

- Designed and delivered training for product managers

- Learned from channel partners and experts across sectors

- Built internal networks and communities of interest

- Gave specific guidance on being net positive

- Covered: sustainability, climate impacts, different tools, ML, LLMs, prompt engineering

Tools tested

- NotebookLM: converts content to podcasts

- Goblin Tools: free, designed for accessibility/neurodiversity, became go-to favourite

- Marvin: organisational memory for user research findings

Common tasks PMs use AI for

- Summarising text: affinity mapping, lengthy policy documents

- Strengthening thinking: positive adversarial approach

- Asking technical questions: reduces cognitive load on engineers

- Increased individual capacity by 10-30%

Bespoke tools built

(Referenced some wider gov stuff like Kuba’s proof of concept AI prototype maker.)

- Chatbot

- Form digitiser (reduced time from 14 hours to <1 hour per form)

- Prototype generator
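
A quick sketch of what the form digitiser figures imply. "<1 hour" is treated as exactly 1 hour, so these are lower bounds; the number of forms is a hypothetical input, not a figure from the talk.

```python
# Figures from the talk: about 14 hours per form before, under 1 hour after.
HOURS_BEFORE = 14.0
HOURS_AFTER_UPPER_BOUND = 1.0  # "<1 hour", so 1.0 is the worst case


def minimum_time_saved_fraction(
    before: float = HOURS_BEFORE, after: float = HOURS_AFTER_UPPER_BOUND
) -> float:
    """Smallest fraction of time saved per form, given the worst-case 'after'."""
    return (before - after) / before


def minimum_hours_saved(
    n_forms: int, before: float = HOURS_BEFORE, after: float = HOURS_AFTER_UPPER_BOUND
) -> float:
    """Lower bound on hours saved across n_forms (n_forms is hypothetical)."""
    return n_forms * (before - after)


if __name__ == "__main__":
    print(f"At least {minimum_time_saved_fraction():.1%} less time per form")
    print(f"At least {minimum_hours_saved(10):.0f} hours saved over 10 forms")
```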

Key learnings from experiments

- Off-the-shelf tools have limitations

- AI doesn't replace critical thinking or speaking to users

- Always need "human in the loop" for quality control

- Humans still needed to make code production-ready

Case study: AI farming grants prototype

- Built in 6 weeks, “side-of-desk” by full-time PMs (i.e. done alongside the PMs’ main work)

- Helps farmers navigate complex grant system (£3bn available annually)

- Text-based prompts give personalised guidance to human agents

- Results: potential £1m cost savings, carbon equivalent of 1,300 short-haul flights (short haul = London to Lisbon) saved, farmers better off

- "Triple win": organisation (faster/cheaper/better), users (better outcomes), planet (reduced emissions)

Resources created

- 10 principles for sustainable services (principle 5: design for efficient architecture in AI) [link]

- 50+ participatory workshops to validate

- Open-sourced guidance on GitHub

- Climate Product Leaders playbook: 38 best practices for climate-centred product management

Key advice

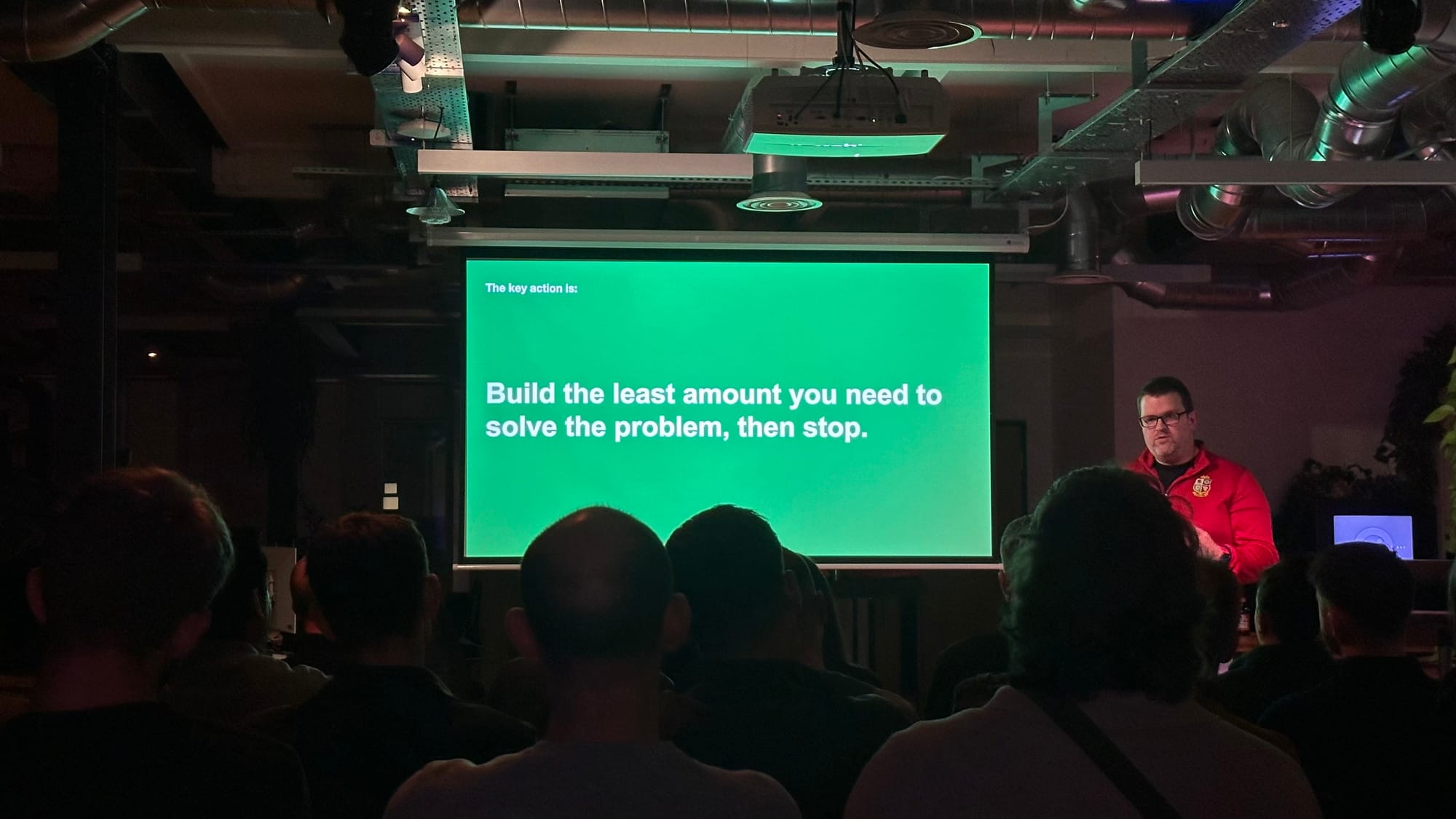

- Build the least amount needed to solve the problem, then stop

- It's not user needs versus sustainability - they go hand in hand

- Sustainability should be like accessibility: baked into how we work, not separate

- Product people make the calls on sustainability, not CEOs

- Think about "climate debt" like technical debt

[I left after the talks as I needed to get home, so I missed the post-talk questions and discussion]

Patterns and themes across the talks

Mindset over process

- All three speakers: cultural and mindset shifts over tools and frameworks

- Mark: learning culture versus experimentation culture

- Jennifer: exploration versus validation

- John: sustainability as core principle, not add-on

Evidence trumps opinion

- Mark: hierarchy of evidence showing experimentation beats expert opinion

- Jennifer: research revealing what users actually do versus what stakeholders assume

- John: testing and validation of AI tools before deployment

Embrace uncertainty and failure

- Mark: reframing failure as learning

- Jennifer: no "wrong answers" in research, pivot when needed

- John: experimentation with AI tools, soft landings expected

Human-centred

- Jennifer: always talk to real users

- John: "human in the loop" essential for AI

- Mark: learning about customers is never wasted time

Empowering teams

- All three: give teams confidence, safety and frameworks to make better decisions

- The fears: Mark's fear of failure, Jennifer's stakeholder pressure, John's AI confidence gap

Practical over theoretical

- All three: concrete examples, tools and frameworks that showed impact

- Open-sourced guidance (John's GitHub resources)

Sustainable and lean delivery

- Jennifer: don't build what users don't need

- John: build the least amount to solve the problem

- Mark: learning prevents wasted development time

Challenge authority constructively

- Mark: challenging HIPPOs with evidence

- Jennifer: pivoting from stakeholder requests based on research

- John: questioning AI hype while finding practical applications

—

Takeaways

Start small and build confidence

- Run one experiment framed as "learning" not "winning" to shift team mindset

- Conduct one piece of user research with no predetermined outcome

- Test one AI tool (like Goblin Tools) for a low-stakes task

Create psychological safety

- Establish that learning is the success metric, not shipping features

- Share research findings that contradict assumptions as positive outcomes

- Celebrate "failures" that generated valuable insights

Build frameworks and guidance

- Document your hierarchy of evidence for decision-making

- Create research discussion guides for your domain

- Develop principles for your products (such as DEFRA's open-source sustainability guidance)

Focus on capability building

- Run awareness/training sessions on user research methods

- Create AI literacy programmes for your teams

- Build internal communities of practice around experimentation and learning

Measure differently

- Track learnings generated, not just features shipped

- Measure team confidence levels (like DEFRA's benchmark survey)

- Calculate environmental impact of your digital products

Challenge with evidence

- Use real user behaviour data to inform backlog prioritisation

- Test assumptions before building

- Always include "human in the loop" for AI-assisted work

Keep things lean

- Build minimum needed to solve the problem

- Remove features that analytics show aren't used

- Consider sustainability implications of every new feature

Share and collaborate

- Open-source your learnings and frameworks

- Look to other sectors for inspiration (like DEFRA partnering with climate tech experts)

- Join wider communities like Climate Product Leaders

—

(My quick ideas on) What here might help in public healthcare?

(Not a definitive list, just a quick jive over breakfast.)

Leverage existing government guidance

- Use DEFRA's open-sourced sustainability principles and GitHub guidance [link]

- Reference the government service manual updates on AI [link]

- Learn from cross-sector government examples (DEFRA's forms digitisation, farming grants chatbot)

Address similar constraints

- Like DEFRA, healthcare faces headcount restrictions and budget pressures - DEFRA's PMs saw AI increase individual capacity by 10-30%

- John's "side of desk" approach shows innovation possible alongside BAU, if small enough

- Frame experimentation as learning to get leadership buy-in in risk-averse environments

Navigate complex stakeholder landscapes

- Like the gambling industry, healthcare has multiple stakeholders - use Mark's learning culture approach to align diverse groups

- Jennifer's pivot technique particularly relevant when clinical stakeholders have strong opinions

- Use hierarchy of evidence to challenge clinical HIPPOs constructively

Build trust through evidence

- Healthcare requires high trust - Jennifer's approach of multiple data sources (analytics, heat mapping, research) builds confidence

- Mark's learning metrics communicate value without overpromising

- John's "human in the loop" principle essential for patient safety

Focus on accessibility and inclusion

- John's emphasis on Goblin Tools (designed for neurodiversity) aligns with NHS accessibility requirements

- Consider DEFRA's approach to equity in grant access when designing patient-facing services

- Use AI to reduce barriers (like DEFRA's form digitisation) for patients with varying digital literacy

Sustainability as patient care

- NHS is major emitter - apply John's "build least needed" principle

- Reducing unnecessary features improves patient experience and environmental impact

- Frame sustainability as part of quality care, not separate concern

Work with your constraints

- Healthcare has significant data governance and privacy requirements - test AI tools thoroughly like DEFRA did

- Paper forms still prevalent in healthcare - DEFRA's digitisation tool (14 hours to less than 1 hour) directly applicable (if digitising is appropriate etc)

- Use Mark's approach: celebrate learning even when experiments don't ship due to regulatory constraints

Create learning infrastructure

- Build organisational memory with a tool like Marvin, which DEFRA tested - prevents repeating research with the same groups (for example, patient groups)

- Establish communities of practice across trusts (something for our FFFU work?)

- Share learnings across NHS organisations to avoid duplication

Start with quick wins

- DEFRA's 6-week prototype approach suits NHS funding cycles

- Focus on areas where AI can reduce administrative burden (for example, carrying DEFRA's grant guidance idea over to appointment guidance)

- Use "triple win" framing: trust benefits, patient outcomes, environmental impact

Address confidence gaps

- Create safe spaces to experiment without patient safety risk

- Healthcare teams may have similar AI confidence issues to DEFRA's initial "very unconfident" baseline

- Provide practical training on specific use cases relevant to healthcare

Balance innovation with safety

- Combining Jennifer's "no wrong answer in research" with John's "human in the loop" creates a safe innovation framework

- Always validate AI outputs with clinical expertise before patient-facing deployment

- Use Mark's learning culture to enable teams to test without fear, while maintaining safety standards

Next Product Tank Leeds: late January 2026, Lloyds